1.2 What is Reinforcement Learning?

Good news! We can sum up the core idea of Reinforcement Learning in just one powerful sentence (Brunskill 2022):

But what exactly does that mean? Let’s break it down!

Learning

At its core, learning in Reinforcement Learning occurs through trial and error, where an agent refines its actions based on evaluative feedback from the environment.

Evaluative feedback indicates how good the action taken was, but not whether it was the best or the worst action. Intuitively, this type of feedback can be thought of as learning through experience.

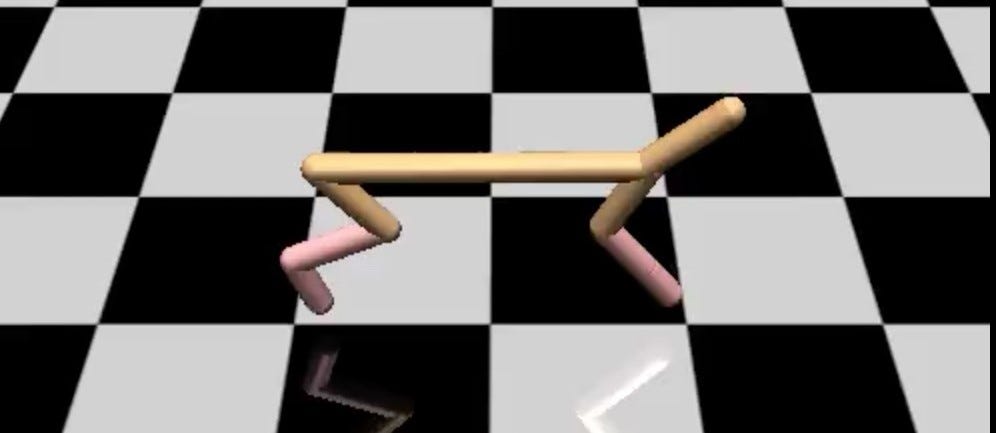

Example: Learning How to Walk

Learning to walk involves trial and error, where feedback comes from the outcome of each attempt—success or falling—rather than explicit instruction. This aligns with evaluative feedback in reinforcement learning, where the agent learns from the consequences of its actions, not direct guidance.

Example: Learning to Distinguish Right from Wrong

Learning to distinguish right from wrong often relies on experiencing the outcomes of decisions and receiving approval or disapproval from others. This reflects evaluative feedback in reinforcement learning, where behavior is shaped by rewards or penalties rather than explicit rules.

In contrast, Supervised and Unsupervised Learning rely on instructive feedback, typically through gradient-based optimization.

Instructive feedback indicates the correct action to take, independently of the action actually taken. Intuitively, this type of feedback can be thought of as learning from ground truth.
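
To make the contrast concrete, here is a minimal Python sketch of the two kinds of feedback. The three actions, their reward values, and the function names are all hypothetical and chosen only for illustration. The evaluative signal scores the action that was taken, while the instructive signal names the correct action no matter what was taken.

```python
import random

# Hypothetical problem: the hidden quality of each available action.
TRUE_REWARD = {"left": 0.2, "stay": 0.5, "right": 1.0}


def evaluative_feedback(action):
    """Score the action that was actually taken, and reveal nothing else."""
    return TRUE_REWARD[action]


def instructive_feedback(action):
    """Name the correct action, independently of the action taken."""
    return max(TRUE_REWARD, key=TRUE_REWARD.get)


def learn_by_trial_and_error(num_trials, rng):
    """Find the best action using evaluative feedback only."""
    estimates = {a: 0.0 for a in TRUE_REWARD}
    counts = {a: 0 for a in TRUE_REWARD}
    for _ in range(num_trials):
        action = rng.choice(list(TRUE_REWARD))  # try an action
        reward = evaluative_feedback(action)    # observe how good it was
        counts[action] += 1
        # Incremental sample average of the rewards seen for this action.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return max(estimates, key=estimates.get)


best = learn_by_trial_and_error(200, random.Random(0))
```

With instructive feedback a single query reveals the answer. With evaluative feedback the learner has to try each action and compare the scores it receives, which is the trial-and-error process described earlier.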

Example: Cheetah

Supervised and Unsupervised Learning focus on identifying what makes an image a cheetah by learning patterns from a dataset of animal images. In contrast, Reinforcement Learning is about teaching a cheetah how to run by interacting with its environment (Lecture 10).

“Here’s some examples (images), now learn patterns in these examples…”

“Here’s an environment, now learn patterns by exploring it…”

Optimal

The goal of Reinforcement Learning is to maximize rewards over time by finding the best possible strategy. This involves seeking:

- A maximized discounted sum of rewards, or return \(G\).

- Optimal Value Functions \(V^{*}\).

- Optimal Action-Value Functions \(Q^{*}\).

- Optimal Policies \(\pi^{*}\).

- A balance between exploration and exploitation, commonly controlled by a parameter such as \(\epsilon\).
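
The items above can be written out explicitly. What follows is a sketch in common textbook notation, not necessarily the notation used later in these notes. The discount factor \(\gamma \in [0, 1]\), the time index \(t\), the final time step \(T\), and the action set \(\mathcal{A}\) are assumptions introduced here.

\[ G_t = R_{t+1} + \gamma R_{t+2} + \dots + \gamma^{T-t-1} R_{T} = \sum_{k=0}^{T-t-1} \gamma^{k} R_{t+k+1} \]

\[ V^{*}(s) = \max_{\pi} \mathbb{E}_{\pi}\left[ G_t \mid S_t = s \right] \]

\[ Q^{*}(s, a) = \max_{\pi} \mathbb{E}_{\pi}\left[ G_t \mid S_t = s, A_t = a \right] \]

\[ \pi^{*}(s) = \arg\max_{a \in \mathcal{A}} Q^{*}(s, a) \]

One common way \(\epsilon\) enters is the \(\epsilon\)-greedy rule: with probability \(1 - \epsilon\) the agent picks \(\arg\max_{a} Q(s, a)\) (exploitation), and with probability \(\epsilon\) it picks an action uniformly at random from \(\mathcal{A}\) (exploration).

As a small worked example, take \(\gamma = 0.9\) and a three-step episode with rewards \(R_1 = 0\), \(R_2 = 0\), \(R_3 = 1\):

\[ G_0 = 0 + 0.9 \cdot 0 + 0.9^{2} \cdot 1 = 0.81 \]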

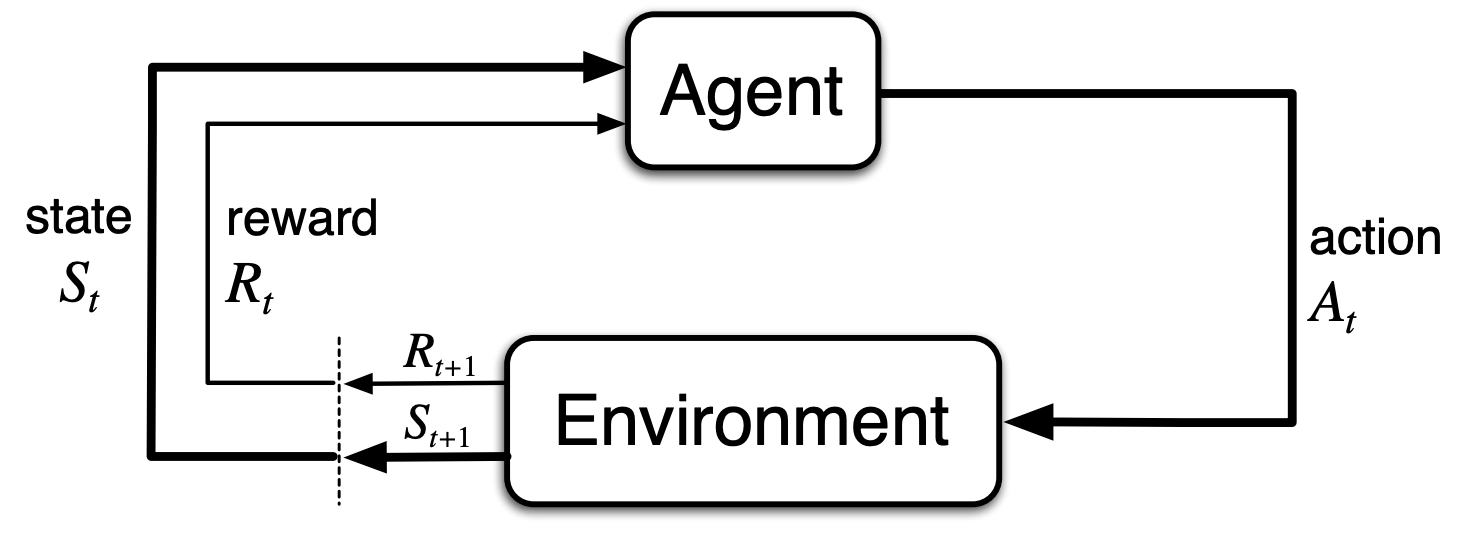

Sequential Decision-Making

Unlike a one-time choice, Reinforcement Learning involves a chain of decisions where each action affects the next.

\(\tau: S_{0}, A_{0}, R_{1}, S_{1}, A_{1}, R_{2}, \dots, S_{T-1}, A_{T-1}, R_{T}\)

- A Markov Decision Process (MDP) is a formal framework for modeling sequential decision-making.

- The agent selects actions \(A_t\) over multiple time steps, shaping its future states \(S_t\) and rewards \(R_t\).

- Each decision affects not only immediate rewards but also the trajectory \(\tau\) of future outcomes.
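
The chain of states, actions, and rewards can be sketched in a few lines of Python. The four-state corridor environment, the reward of 1 for reaching the last state, the discount `gamma=0.9`, and the names `step`, `rollout`, and `discounted_return` are all hypothetical and chosen only to illustrate the idea.

```python
# Hypothetical corridor: states 0..3, and state 3 ends the episode.
N_STATES = 4


def step(state, action):
    """Environment dynamics: return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def rollout(policy, max_steps=20):
    """Run one episode and record it as (S_t, A_t, R_{t+1}) triples."""
    state, trajectory = 0, []
    for _ in range(max_steps):
        action = policy(state)                    # agent chooses A_t
        next_state, reward, done = step(state, action)
        trajectory.append((state, action, reward))
        state = next_state                        # A_t determines S_{t+1}
        if done:
            break
    return trajectory


def discounted_return(trajectory, gamma=0.9):
    """Discounted sum of the rewards along the trajectory, from t = 0."""
    return sum(gamma**t * r for t, (_, _, r) in enumerate(trajectory))


def always_right(state):
    """A fixed policy that moves one state to the right every step."""
    return +1


tau = rollout(always_right)
g0 = discounted_return(tau)
```

Here `tau` holds three steps, and only the last one earns a reward, so the return from the start is about 0.81. A policy that wastes a step moving left first would reach the same reward one step later and receive a smaller discounted return, which shows how early decisions shape the whole trajectory.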