7.2 On-Policy Function Approximation

On-Policy Function Approximation

Approximating state values is not sufficient to achieve control.

\[ \hat{V}(s; \mathbf{w}) \approx V_{\pi}(s) \]

We need to approximate action-value functions.

\[ \hat{Q}(s,a; \mathbf{w}) \approx Q_{\pi}(s,a) \]

In doing so, we will incorporate previously learned techniques, such as SARSA from TD learning to devise a learning algorithm.

Because \(s\) represents any state information and \(a\) represents all possible actions.

Mathematical Intuition

Similarly, MSE loss for action values:

\[ F(\mathbf{w}_{t}) = \mathbb{E}_{\pi}[(Q_{\pi}(S_{t},A_{t}) - \hat{Q}(S_{t},A_{t}; \mathbf{w}_{t}))^{2}] \]

Similarly, SGD update for parameters \(\mathbf{w}\):

\[ \mathbf{w}_{t+1} = \mathbf{w}_{t} + \alpha(Q_{\pi}(S_{t},A_{t}) - \hat{Q}(S_{t},A_{t}; \mathbf{w}_{t}))\nabla_{\mathbf{w}_{t}} \hat{Q}(S_{t},A_{t}; \mathbf{w}_{t}) \]

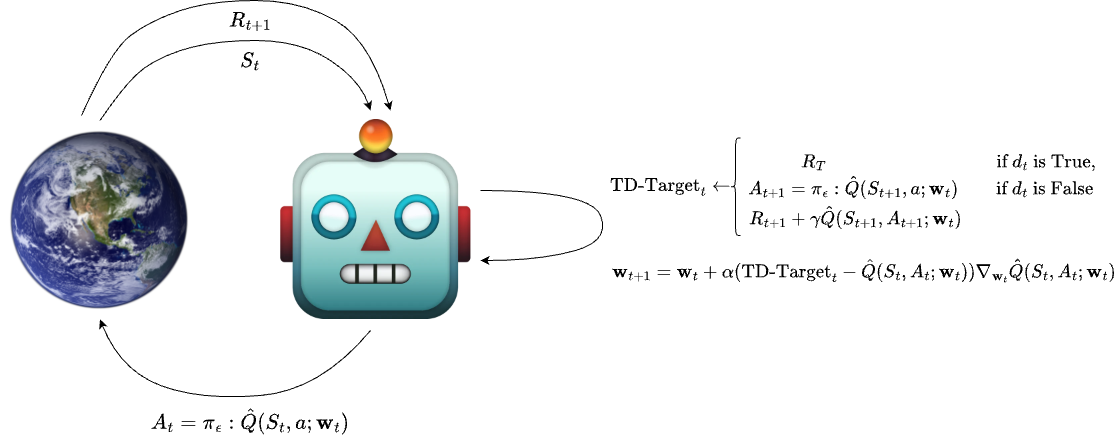

Finally, to implement TD learning, we substitute \(Q_{\pi}(s,a)\) for our TD targets at each step:

\[ \langle S_{0}, \ R_{1} + \gamma \hat{Q}(S_{1},A_{1}; \mathbf{w}_{0})\rangle, \ ... \ ,\langle S_{T-1},R_{T}\rangle \]

Thus, our update equation for TD on-policy approximation:

\[ \mathbf{w}_{t+1} = \mathbf{w}_{t} + \alpha(R_{t+1} + \gamma \hat{Q}(S_{t+1},A_{t+1}; \mathbf{w}_{t}) - \hat{Q}(S_{t},A_{t}; \mathbf{w}_{t}))\nabla_{\mathbf{w}_{t}} \hat{Q}(S_{t},A_{t}; \mathbf{w}_{t}) \]

On-Policy Function Approximation: Illustration

Pseudocode

Exercise

How can we summarize the update equation in 3 components?

\[ \mathbf{w}_{t+1} = \mathbf{w}_{t} + \alpha(R_{t+1} + \gamma \hat{Q}(S_{t+1},A_{t+1}; \mathbf{w}_{t}) - \hat{Q}(S_{t},A_{t}; \mathbf{w}_{t}))\nabla_{\mathbf{w}_{t}} \hat{Q}(S_{t},A_{t}; \mathbf{w}_{t}) \]