11.1 Model-based Reinforcement Learning

In Lectures 5-10, we explored Model-free Reinforcement Learning.

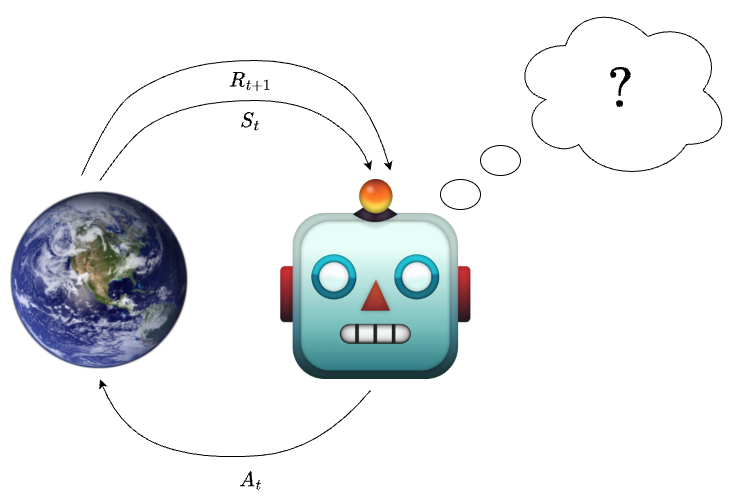

Model-free Reinforcement Learning emphasizes learning directly from interactions with the environment without relying on a model of its dynamics.

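Because the environment dynamics are unknown, agents need to sample many interactions with the environment. These dynamics are the probability of reaching next state \(s'\) with reward \(r\) after taking action \(a\) in state \(s\):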

\[ P(s', r \mid s, a) \]
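To make this distribution concrete, here is a minimal Python sketch. It assumes a small environment with discrete states and rewards, and it estimates \(P(s', r \mid s, a)\) by counting how often each outcome follows each state-action pair (a simple tabular, maximum-likelihood model). The function names `estimate_dynamics` and `expected_reward` and the sample transitions are hypothetical and exist only for illustration. Once such a model exists, the agent can answer a question like "what reward should I expect from this action?" without taking another step in the environment.

```python
from collections import Counter, defaultdict


def estimate_dynamics(transitions):
    """Estimate P(s', r | s, a) by counting observed outcomes per (s, a) pair.

    `transitions` is an iterable of (s, a, r, s_next) tuples (hypothetical format).
    Returns a dict mapping (s, a) -> {(s_next, r): probability}.
    """
    counts = defaultdict(Counter)
    for s, a, r, s_next in transitions:
        counts[(s, a)][(s_next, r)] += 1

    model = {}
    for sa, outcome_counts in counts.items():
        total = sum(outcome_counts.values())
        # Normalize counts into empirical probabilities.
        model[sa] = {outcome: n / total for outcome, n in outcome_counts.items()}
    return model


def expected_reward(model, s, a):
    """Predict E[r | s, a] from the learned model, without touching the environment."""
    return sum(p * r for (_, r), p in model[(s, a)].items())


# Hypothetical toy transitions (s, a, r, s_next), made up for illustration.
samples = [
    ("A", "left", 0, "A"),
    ("A", "left", 0, "A"),
    ("A", "left", 1, "B"),
    ("A", "right", 1, "B"),
]

model = estimate_dynamics(samples)
print(model[("A", "left")])
print(expected_reward(model, "A", "left"))
```

Counting is the simplest possible model and only works when states and rewards are few and discrete; larger problems typically replace the table with a learned function approximator.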

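Without a model of the environment dynamics, exploration is blind: the agent cannot anticipate where an action will lead before trying it. Model-free methods can only learn from rewards they have actually experienced.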

But what if agents could predict the outcomes of their actions without directly interacting with the environment? Could this lead to more efficient learning?

Think about how we humans often plan.

For example, imagine you are about to graduate from GW. You could take two possible actions:

- Accept a consulting job.

- Start a PhD.

The outcomes of each choice are not immediately visible: you can’t just try both and “reset.” Instead, you have to simulate in your head what the future might look like, based on your prior knowledge and expectations:

- What career growth might the consulting job bring?

- What opportunities could the PhD open up?

- How long will each path take?

Unlike trial-and-error learning, where feedback comes directly from experience, here you are relying on a mental model of the world to forecast what might happen and make a choice.