11.2 Monte Carlo Tree Search (MCTS)

Model-based RL uses a model of the environment's dynamics to plan actions before committing to them.

Problem

How can we focus our search on the most promising actions to make planning more effective?

Real Life Example 🧠

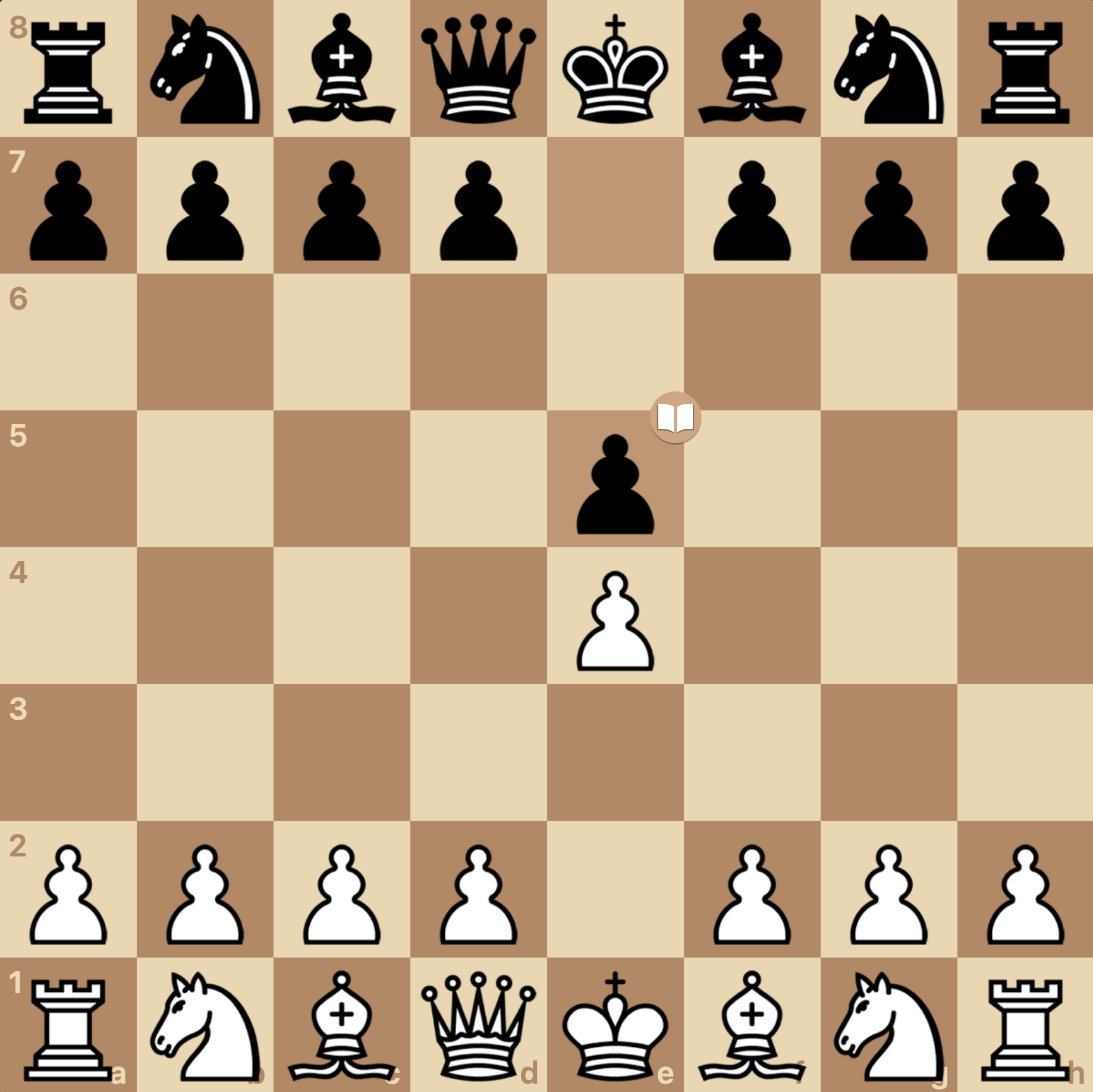

Suppose you are playing a game of chess and arrive at a complicated position:

- \(S\) — The current board position in front of you.

- \(A\) — Several possible candidate moves you could make.

- \(R\) — You won’t know the true reward immediately — but you can simulate outcomes by imagining how the game might unfold.

- \(S'...\) — For each action, you mentally “roll out” future positions by predicting your opponent’s possible replies and continuing a few moves ahead.

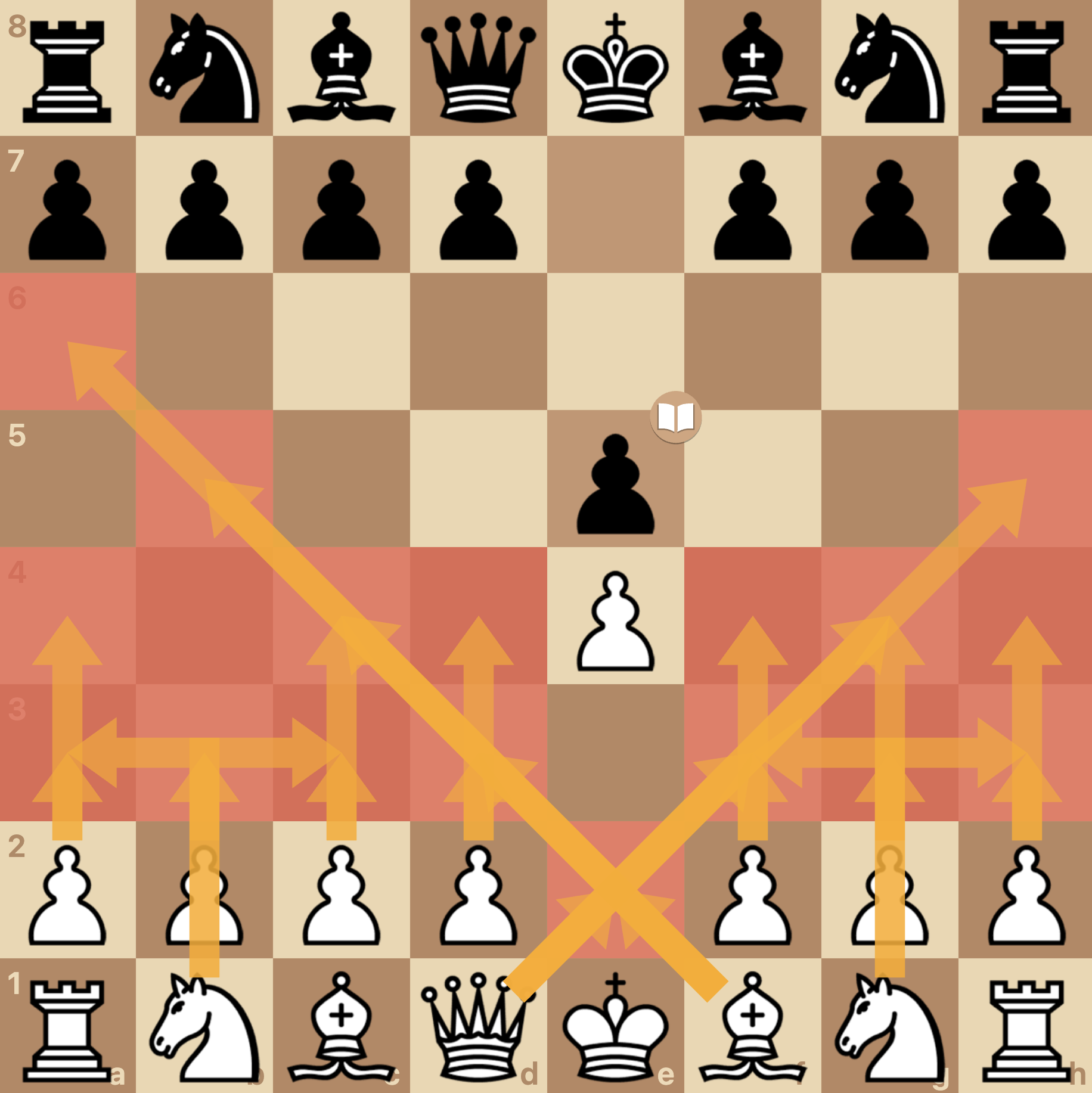

You don’t need to explore every line to the very end of the game — instead, you simulate a sample of promising moves, update your estimates, and bias future simulations toward the moves that look strongest.
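The simulate, update, and bias loop described above can be sketched in a few lines. This is a simplified illustration, not full MCTS: it only chooses among the moves at the current position (no tree is grown), and it uses the UCB1 rule to bias simulations toward moves that look strong. The move names, the `WIN_PROB` table, the `toy_rollout` function, and the exploration constant `c=1.4` are all hypothetical stand-ins for a real game simulator.

```python
import math
import random


def ucb1(mean_value, visits, total_visits, c=1.4):
    """Score a move: its estimated value plus an exploration bonus."""
    if visits == 0:
        return float("inf")  # make sure every move is tried at least once
    return mean_value + c * math.sqrt(math.log(total_visits) / visits)


def plan(moves, rollout, n_simulations=2000, seed=0):
    """Spend a simulation budget on candidate moves, favoring strong ones."""
    rng = random.Random(seed)
    visits = {m: 0 for m in moves}
    value = {m: 0.0 for m in moves}  # running mean of rollout results

    for t in range(1, n_simulations + 1):
        # Bias: pick the move with the highest UCB1 score so far.
        move = max(moves, key=lambda m: ucb1(value[m], visits[m], t))
        # Simulate: imagine how the game might unfold after this move.
        result = rollout(move, rng)
        # Update: fold the result into the move's running average.
        visits[move] += 1
        value[move] += (result - value[move]) / visits[move]

    # The most-simulated move is the one the search trusted most.
    best = max(moves, key=lambda m: visits[m])
    return best, visits, value


# Hypothetical toy "game": each candidate move has a hidden win probability.
WIN_PROB = {"Nf3": 0.4, "Qh5": 0.2, "e4": 0.8}


def toy_rollout(move, rng):
    """Assumed stand-in for a playout: 1.0 for a win, 0.0 for a loss."""
    return 1.0 if rng.random() < WIN_PROB[move] else 0.0


best, visits, value = plan(list(WIN_PROB), toy_rollout)
print(best, visits)
```

Under these assumptions, most of the simulation budget ends up on the move with the highest hidden win probability, while the weaker moves are sampled only often enough to confirm they are worse. That is the same trade-off you make at the chessboard: a brief look at every candidate, then deeper thought on the promising ones.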

Solution