5.3 On-Policy Monte Carlo

We need a better method of control, that is, of approximating the optimal policy \(\pi_{*}\), in associative environments without relying on unrealistic assumptions such as exploring starts.

On-policy learning evaluates or improves the same policy \(\pi\) that is used to make decisions, i.e. the policy that generates the agent's experience.

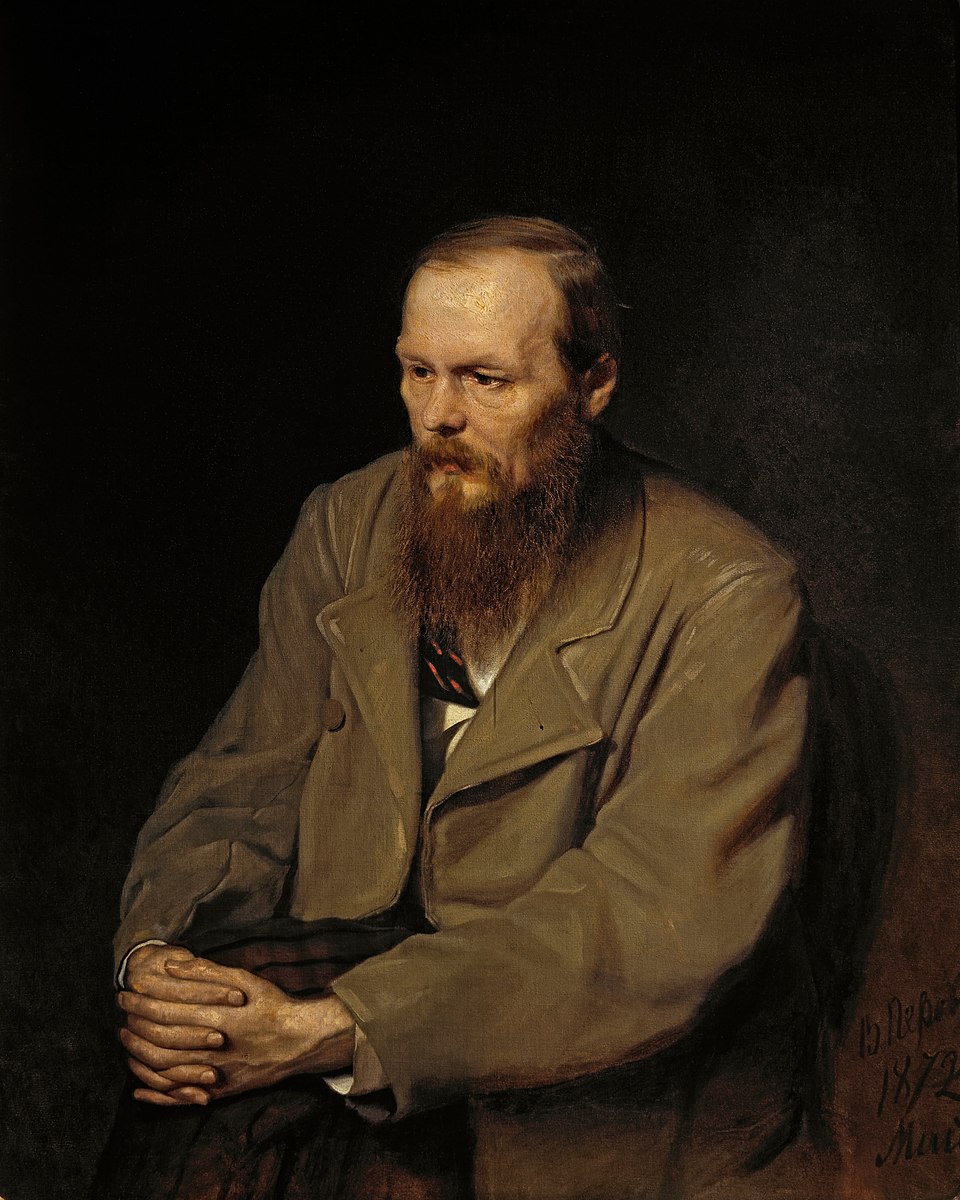

“It is better to go wrong in one’s own way than to go right in someone else’s.”

— Fyodor Dostoevsky

As Dostoevsky suggests, you stick with your own way of doing things, even if it is imperfect. You learn from your own behavior (the actions \(a\) you take in states \(s\) under your current policy \(\pi\)) and improve over time through authentic experience.

For example:

Is it better to try your own coding solution and learn from your mistakes than to copy someone else's code?

How does observing the outcomes of your own actions help you improve your policy over time?

How can we leverage Monte Carlo's learning rule to approximate the optimal policy \(\pi_{*}\) without having to rely on the unrealistic assumption that each episode begins from a randomly chosen state-action pair \((s,a)\)?

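Recall the \(\epsilon\)-greedy methods for balancing exploration and exploitation: with probability \(\epsilon\) the agent selects an action uniformly at random, and otherwise it selects the greedy action.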

\(\epsilon\)-soft policy

\(\epsilon\)-greedy policies are examples of \(\epsilon\)-soft policies, which give every action a probability of at least \(\epsilon / |A(s)|\) in every state. In particular, the probability of every action is non-zero for all state-action pairs, that is:

\[ \pi(a|s) > 0 \quad \text{for all} \quad s \in S \quad \text{and} \quad a \in A(s) \]
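As a small illustration, the check below tests whether a tabular policy satisfies the \(\epsilon\)-soft condition \(\pi(a|s) \geq \epsilon / |A(s)|\), which implies the condition above. The dictionary layout `{state: {action: probability}}`, the function name `is_epsilon_soft`, and the rounding tolerance `tol` are assumptions made for this sketch.

```python
def is_epsilon_soft(policy, epsilon, tol=1e-12):
    """Check pi(a|s) >= epsilon / |A(s)| for every state-action pair.

    `policy` uses a hypothetical tabular layout: {state: {action: probability}}.
    A policy that passes this check with epsilon > 0 also has pi(a|s) > 0 everywhere.
    """
    for action_probs in policy.values():
        floor = epsilon / len(action_probs)
        # tol absorbs floating-point rounding in probabilities computed elsewhere
        if any(p < floor - tol for p in action_probs.values()):
            return False
    return True
```

For example, `is_epsilon_soft({"s0": {"left": 0.5, "right": 0.5}}, 0.1)` is `True`, while the fully greedy `{"s0": {"left": 1.0, "right": 0.0}}` gives `False`, because it never tries `right`.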

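After each episode, the \(\epsilon\)-greedy policy is improved state by state. For each state \(S_t\) visited in the episode, we first find the greedy action \(A^{*} = \arg\max_{a} Q(S_t, a)\) and then assign, for every \(a \in A(S_t)\):

\[ \pi(a|S_t) \gets \begin{cases} 1 - \epsilon + \frac{\epsilon}{|A(S_{t})|} & \text{if} \quad a = A^{*} \quad \text{(exploitation)} \\ \frac{\epsilon}{|A(S_{t})|} & \text{if} \quad a \neq A^{*} \quad \text{(exploration)} \end{cases} \]

These probabilities sum to one: \((|A(S_t)| - 1)\frac{\epsilon}{|A(S_t)|} + 1 - \epsilon + \frac{\epsilon}{|A(S_t)|} = 1\).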
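A minimal sketch of this rule in Python, assuming the action-value estimates for the current state are stored in a plain list `q_values` indexed by action. The function name `epsilon_greedy_probs` and the lowest-index tie-breaking for the argmax are choices made for this illustration.

```python
def epsilon_greedy_probs(q_values, epsilon):
    """Action probabilities pi(.|S_t) for an epsilon-greedy policy.

    q_values[a] holds the estimate Q(S_t, a) for each of the |A(S_t)| actions.
    Ties in the argmax are broken by taking the lowest action index.
    """
    n_actions = len(q_values)
    # A* = argmax_a Q(S_t, a)
    greedy_action = max(range(n_actions), key=lambda a: q_values[a])
    # Every action receives the exploration share epsilon / |A(S_t)| ...
    probs = [epsilon / n_actions] * n_actions
    # ... and the greedy action additionally receives the remaining 1 - epsilon.
    probs[greedy_action] += 1.0 - epsilon
    return probs
```

For example, `epsilon_greedy_probs([1.0, 3.0], 0.5)` returns `[0.25, 0.75]`: each action receives \(0.5 / 2 = 0.25\), and the greedy action (index 1) additionally receives \(1 - 0.5 = 0.5\).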

By using \(\epsilon\)-soft policies, we ensure that every action \(a\) has a non-zero chance of being explored — even while following our current policy \(\pi\).
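Combining the \(\epsilon\)-greedy rule with Monte Carlo returns gives on-policy first-visit Monte Carlo control. The sketch below is a hedged illustration on a hypothetical four-state corridor; the environment, `step`, `mc_control`, `greedy_policy`, and all parameter values are assumptions for this example. Every episode starts in the same state, so there are no exploring starts: exploration comes only from the \(\epsilon\)-soft policy. The \(\epsilon\)-greedy rule is re-declared so the block runs on its own.

```python
import random
from collections import defaultdict

# Hypothetical toy task (not from the original text): a corridor of states 0..3.
# Every episode starts in state 0 (no exploring starts); state 3 is terminal.
# Action 0 moves left (state 0 is a wall), action 1 moves right; each step costs -1.
TERMINAL, ACTIONS = 3, (0, 1)


def step(state, action):
    next_state = max(0, state - 1) if action == 0 else state + 1
    return next_state, -1.0


def epsilon_greedy_probs(q_values, epsilon):
    n = len(q_values)
    best = max(range(n), key=lambda a: q_values[a])  # A* = argmax_a Q(s, a)
    probs = [epsilon / n] * n
    probs[best] += 1.0 - epsilon
    return probs


def mc_control(num_episodes=5000, epsilon=0.1, gamma=1.0, max_steps=100, seed=0):
    """On-policy first-visit Monte Carlo control with an epsilon-greedy policy."""
    rng = random.Random(seed)
    Q = defaultdict(float)     # Q[(s, a)], sample-average estimates
    counts = defaultdict(int)  # number of first-visit returns averaged into Q[(s, a)]

    for _ in range(num_episodes):
        # 1. Generate an episode by following the current epsilon-greedy policy.
        episode, state = [], 0
        for _ in range(max_steps):  # cap guards against very long early episodes
            probs = epsilon_greedy_probs([Q[(state, a)] for a in ACTIONS], epsilon)
            action = rng.choices(ACTIONS, weights=probs)[0]
            next_state, reward = step(state, action)
            episode.append((state, action, reward))
            state = next_state
            if state == TERMINAL:
                break

        # 2. Record the first time step at which each (s, a) pair occurs.
        first_visit = {}
        for t, (s, a, _) in enumerate(episode):
            first_visit.setdefault((s, a), t)

        # 3. Walk backwards accumulating the return G; average it into Q on first visits.
        G = 0.0
        for t in reversed(range(len(episode))):
            s, a, r = episode[t]
            G = gamma * G + r
            if first_visit[(s, a)] == t:
                counts[(s, a)] += 1
                Q[(s, a)] += (G - Q[(s, a)]) / counts[(s, a)]
        # 4. Policy improvement is implicit: the next episode recomputes the
        #    epsilon-greedy probabilities from the updated Q.
    return Q


def greedy_policy(Q):
    """Greedy action for each non-terminal state (lowest index wins ties)."""
    return {s: max(ACTIONS, key=lambda a: Q[(s, a)]) for s in range(TERMINAL)}
```

On this toy corridor, `greedy_policy(mc_control())` should favour action 1 (move right) in every non-terminal state, even though the agent never relies on random starting pairs; the \(\epsilon\)-soft policy alone keeps the left action sampled often enough for its value to be estimated.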